Update, June 25, 2024: This blog post series is now also available as a book called Fundamentals of DevOps and Software Delivery: A hands-on guide to deploying and managing production software, published by O’Reilly Media!

This is Part 3 of the Fundamentals of DevOps and Software Delivery series. In Part 1 and Part 2, you deployed an app on a single server. This is a great way to learn and to get started, and for smaller and simpler apps, a single server may be all you ever need. However, for many production use cases, where you’re building a business that depends on that app, you may run into the following problems with just a single server:

- Outages due to hardware issues

-

If the server has a hardware problem, such as the power supply dying, your users will experience an outage until you replace the server.

- Outages due to software issues

-

If the app crashes due to a software problem, such as a bug in the code, your users will experience an outage until you manually restart it.

- Outages due to load

-

If your app becomes popular enough, the load may exceed what a single server can handle, and your users will experience degraded performance, and potentially outages as well.

- Outages due to deployments

-

If you want to roll out a new version of your app, it’s hard to do so with just a single server without at least a brief outage while you shut down the old version and replace it with the new version.

In short, a single copy of your app is a single point of failure. To run applications in production, you typically want multiple copies, called replicas, of your app. Moreover, you also want a way to manage those replicas: something that can automatically handle hardware issues, software issues, load issues, deployments, and so on. Although you could build your own solutions for deploying and managing replicas, it’s a tremendous amount of work, and there are tools out there that do it for you: these are called orchestration tools.

If you search around, you’ll quickly find that there are many orchestration tools out there, including Kubernetes, EKS, GKE, AKS, OpenShift, EC2, ECS, Marathon / Mesos, Nomad, AWS Lambda, Google Cloud Functions, Azure Serverless, Capistrano, Ansible, and many others. It seems like there’s a new, hot orchestration tool nearly every day. How do you keep track of them all? Which one should you use? How do these tools compare?

This Part will help you navigate the orchestration space by introducing you to the most common types of orchestration tools, which, broadly speaking, fall into the following four categories:

-

Server orchestration: e.g., use Ansible to deploy code onto a cluster of servers.

-

VM orchestration: e.g., deploy VMs into an Auto Scaling Group.

-

Container orchestration: e.g., deploy containers into a Kubernetes cluster.

-

Serverless orchestration: e.g., deploy functions using AWS Lambda.

You’ll work through examples where you deploy the same app using each of these approaches, which will let you see how different orchestration approaches perform across a variety of dimensions (e.g., rolling out updates, load balancing, auto scaling, auto healing, and so on), so that you can pick the right tool for the job.

Here’s what we’ll cover in this post:

-

An introduction to orchestration

-

Server orchestration

-

VM orchestration

-

Container orchestration

-

Serverless orchestration

-

Comparison of orchestration options

Let’s get started by understanding exactly what orchestration is, and why it’s important.

An Introduction to Orchestration

In the world of classical music, a conductor is responsible for orchestration: that is, they direct the orchestra, coordinating all the individual members to start or stop playing, to increase or decrease the tempo, to play quieter or louder, and so on. In the world of software, an orchestration tool is responsible for orchestration: they direct software clusters, coordinating all the individual apps to start or stop, to increase or decreases the hardware resources available to them, to increase or decrease the number of replicas, and so on.

These days, for many people, the term "orchestration" is associated with Kubernetes, but the underlying needs have been around since the first programmer ran the first app for others to use. Anyone running an app in production needs to solve most or all of the following core orchestration problems:

- Deployment

-

You need a way to initially deploy one or more replicas of your app onto your servers.

- Update strategies

-

After the initial deployment, you need a way to periodically roll out updates to all replicas of your app, and in most cases, you want a way to roll out those updates without your users experiencing downtime (known as a zero-downtime deployment).

- Scheduling

-

For each deployment, you need to decide which apps should run on which servers, ensuring that each app gets the resources (CPU, memory, disk space) it needs. This is known as scheduling. With some orchestration tools, you do the scheduling yourself, manually; other orchestration tools provide a scheduler that can do it automatically, and this scheduler usually implements some sort of bin packing algorithm to try to use the resources available as efficiently as possible.

- Rollback

-

If there is a problem when rolling out an update, you need a way to roll back all replicas to a previous version.

- Auto scaling

-

As load goes up and down, you need a way to automatically scale your app up and down in response. This may include vertical scaling, where you scale the resources available to your existing servers up or down, such as getting faster CPUs, more memory, or bigger hard drives, as well as horizontal scaling, where you deploy more servers and/or more replicas of your app across your servers.

- Auto healing

-

You need something to monitor your apps, detect if they are not healthy (i.e., the app is not responding correctly or at all), and to automatically respond to problems by restarting or replacing the app or server.

- Configuration

-

If you have multiple environments (e.g., dev, stage, and prod), you need a way to be able to configure the app differently in each environment: e.g., use different domain names or different memory settings in each environment.

- Secrets management

-

You may need a way to securely pass sensitive configuration data to your apps (e.g., passwords, API keys).

- Load balancing

-

If you are running multiple replicas of your app, you may need a way to distribute traffic across all those replicas.

- Service communication

-

If you are running multiple apps, you may need to give them a way to communicate with each other, including a way to find out how to connect to other apps (service discovery), and ways to control and monitor that communication, including authentication, authorization, encryption, error handling, observability, and so on (service mesh).

- Disk management

-

If your app stores data on a local hard drive, then as you deploy replicas of your app to various servers, you need to find a way to ensure that the right hard drive is connected to the right servers.

Over the years, there have been dozens of different approaches to solving each of these problems. In the pre-cloud era, since every on-prem deployment was different, most companies wrote their own bespoke solutions, typically consisting of gluing together various scripts and tools to solve each problem. Nowadays, the industry is starting to standardize around four broad types of solutions:

- Server orchestration

-

You have a pool of servers that you manage.

- VM orchestration

-

Instead of managing servers directly, you manage VM images.

- Container orchestration

-

Instead of managing servers directly, you manage containers.

- Serverless orchestration

-

You no longer think about servers at all, and just focus on managing apps, or even individual functions.

We’ll discuss each of these in turn in the next several sections, starting with server orchestration.

Server Orchestration

The original approach used in the pre-cloud era, and one that, for better or worse, is still fairly common today, is to do the following:

-

Set up a bunch of servers.

-

Deploy your apps across the servers.

-

When you need to roll out changes, update the servers in place.

I’ve seen companies use a variety of tools for implementing this approach, including configuration management tools (e.g., Ansible, Chef, Puppet), specialized deployment scripts (e.g., Capistrano, Deployer, Mina, Fabric, Shipit), and, perhaps the most common approach, thousands and thousands of ad hoc scripts.

Because this approach pre-dates the cloud era, it also predates most attempts at creating standardized tooling for it (which is why there are so many different tools for it), and I’m not aware of any single, commonly accepted name for it. Most people would just refer to it as "deployment tooling," as deployment was the primary focus (as opposed to auto scaling, auto healing, service discovery, etc.). For the purposes of this blog post series, I’ll refer to it as server orchestration, to disambiguate it from the newer orchestration approaches you’ll see later, such as VM and container orchestration.

|

Key takeaway #1

Server orchestration is an older, mutable infrastructure approach where you have a fixed set of servers that you maintain and update in place. |

To get a feel for server orchestration, let’s use Ansible. In Part 2, you saw how to deploy a single EC2 instance using Ansible. In this post, you’ll first use Ansible to deploy multiple servers, and once you have several servers to work with, you’ll be able to see what server orchestration looks like in practice.

Example: Deploy Multiple Servers in AWS Using Ansible

|

Example Code

As a reminder, you can find all the code examples in the blog post series’s sample code repo in GitHub. |

The first thing you need for server orchestration is a bunch of servers. If you have existing servers you can use—e.g., several physical servers on-prem or several virtual servers in the cloud—and you have SSH access to those servers, you can skip this section, and go to the next one.

If you don’t have servers you can use, this section will show you how to deploy several EC2 instances using Ansible. As mentioned in Part 2, deploying and managing servers (hardware) is not really what configuration management tools were designed to do, but for learning and testing, Ansible is good enough. Note that the way you’ll use Ansible to deploy multiple EC2 instances in this section is meant to showcase server orchestration in its canonical form, with a fixed set of servers, and not the idiomatic approach for running multiple servers in the cloud; you’ll see the more idiomatic approach later in this blog post, in the VM orchestration section.

The blog post series’s sample code repo in GitHub contains an Ansible playbook called create_ec2_instances_playbook.yml (note the "s" in "instances," implying multiple instances, unlike the playbook from Part 2) in the ch3/ansible folder that can do the following:

-

Prompt you for several input variables:

-

num_instances: How many EC2 instances to create. -

base_name: What to name all the resources created by this playbook. -

http_port: What port the instances should listen on for HTTP requests.

-

-

Create multiple EC2 instances, each with the

Ansibletag set tobase_name. -

Create a security group for the instances which opens up port 22 (for SSH access) and

http_port(for HTTP access). -

Create an EC2 Key Pair you can use to connect to those instances via SSH.

To use this playbook, git clone the sample code repo, if you haven’t already (if you are new to Git, check out the

Git tutorial in Part 4):

$ git clone https://github.com/brikis98/devops-book.gitThis will check out the sample code into the devops-book folder. Next, head into the fundamentals-of-devops folder you created in Part 1 to work through the examples in this blog post series, and create a new ch3/ansible subfolder:

$ cd fundamentals-of-devops

$ mkdir -p ch3/ansible

$ cd ch3/ansibleCopy create_ec2_instances_playbook.yml from the devops-book folder into ch3/ansible:

$ cp -r ../../devops-book/ch3/ansible/create_ec2_instances_playbook.yml .To run this playbook, make sure Ansible is installed, authenticate to AWS as described in

Authenticating to AWS on the command line, and run ansible-playbook as before. Ansible will start to interactively prompt

you for input variables:

$ ansible-playbook -v create_ec2_instances_playbook.yml

How many instances to create?: 3

What to use as the base name for resources?: sample_app_instances

What port to use for HTTP requests?: 8080You can enter the values interactively and hit Enter, or, alternatively, you can define the variables in a YAML file, such as the sample-app-vars.yml file shown in Example 26:

num_instances: 3

base_name: sample_app_instances

http_port: 8080You can then use the --extra-vars flag to pass this variables file to the ansible-playbook command:

$ ansible-playbook \

-v create_ec2_instances_playbook.yml \

--extra-vars "@sample-app-vars.yml"This will create three empty servers that you can configure and manage as you wish. It’s a great playground to get a sense for server orchestration. As a first step, let’s improve the security and reliability of your app deployments, as discussed next.

Example: Deploy an App Securely and Reliably Using Ansible

As explained in Watch out for snakes: these examples have several problems, the code used to deploy apps in the previous blog posts had a number of concerns related to security and reliability issues: e.g., running the app as a root user, listening on port 80, no automatic app restart in case of crashes, and so on. It’s time to fix these issues and get this code a bit closer to something you could use in production.

First, just as in Section 2.3.2, you need to tell Ansible what servers you want to configure. You do this using either an inventory file or, if you deployed servers in the cloud, such as the EC2 instances in the previous section, you can use an inventory plugin, as shown in Example 27, to discover your servers automatically.

plugin: amazon.aws.aws_ec2

regions:

- us-east-2

keyed_groups:

- key: tags.Ansible

leading_separator: ''Just as in the previous blog post, this inventory file will create groups based on the Ansible tag.

If you used the playbook in the previous section, that tag will be set to the value you entered for the base_name

variable. In the preceding section, I used "sample_app_instances" as the base_name, so that’s what the group will be

called. You’ll need to configure group variables for this group by creating a YAML file with the name of the group in

the group_vars folder. So that will be group_vars/sample_app_instances.yml, as shown in

Example 28:

ansible_user: ec2-user

ansible_ssh_private_key_file: ansible-ch3.key

ansible_host_key_checking: falseThis file configures the user, private key, and host key checking settings for the sample_app_instances group. Now you

can use a playbook to configure the servers in this group to run the Node.js sample app. Create a new playbook called

configure_sample_app_playbook.yml, with the contents shown in

Example 29:

- name: Configure servers to run the sample-app

hosts: sample_app_instances (1)

gather_facts: true

become: true

roles:

- role: nodejs-app (2)

- role: sample-app (3)

become_user: app-user (4)Here’s what this playbook does:

| 1 | Target the sample_app_instances group you just configured in your inventory. |

| 2 | Instead of a single sample-app role that does everything, as you saw in Part 2, the

code in this blog post uses two roles. The first role, called nodejs-app, is responsible for

configuring a server to run Node.js apps. You’ll see the code for this role shortly. |

| 3 | The second role is called sample-app, and it’s responsible for running the sample app. You’ll see the code

for this role shortly as well. |

| 4 | The sample-app role will be executed as the OS user app-user, which is a user that the nodejs-app role creates,

rather than as the root user. |

Create just a single file and folder for the nodejs-app role, roles/nodejs-app/tasks/main.yml:

roles

└── nodejs-app

└── tasks

└── main.yml

Put the code shown in Example 30 into tasks/main.yml:

nodejs-app role (ch3/ansible/roles/nodejs-app/tasks/main.yml)- name: Add Node packages to yum (1)

shell: curl -fsSL https://rpm.nodesource.com/setup_21.x | bash -

- name: Install Node.js

yum:

name: nodejs

- name: Create app user (2)

user:

name: app-user

- name: Install pm2 (3)

npm:

name: pm2

version: latest

global: true

- name: Configure pm2 to run at startup as the app user

shell: eval "$(sudo su app-user bash -c 'pm2 startup' | tail -n1)"Here’s what this role does:

| 1 | Install Node.js, just as you’ve seen before. |

| 2 | Create a new OS user called app-user. This allows you to run your apps with a user with more limited permissions

than root. |

| 3 | Install PM2 and configure it to run on boot. You’ll see what PM2 is and why it’s installed shortly. |

As you can see, the nodejs-app role is fairly generic: it’s designed so you can use it with any Node.js app, which

makes this a highly reusable piece of code.

The sample-app role, on the other hand, is specifically designed to run the sample app. Create two subfolders

for this role, files and tasks:

roles

├── nodejs-app

└── sample-app

├── files

│ ├── app.config.js

│ └── app.js

└── tasks

└── main.yml

app.js is the exact same "Hello, World" Node.js sample app you saw in Part 1. Copy it into the files folder:

$ cp ../../ch1/sample-app/app.js roles/sample-app/files/app.config.js is a new file that is used to configure PM2. So, what is PM2? PM2 is a process supervisor, which is a tool you can use to run your apps, monitor them, restart them after a reboot or a crash, manage their logging, and so on. Process supervisors provide one layer of auto healing for long-running apps. You’ll see other types of auto healing later in this post.

There are many process supervisors out there, including supervisord, runit, and systemd, with systemd as the one you’re likely to use in most situations, as it’s built into most Linux distributions these days. You’ll see an example of how to use systemd later in this blog post. For this example, I picked PM2 because it has features designed specifically for Node.js apps. To use these features, create a configuration file called app.config.js, as shown in Example 31:

module.exports = {

apps : [{

name : "sample-app",

script : "./app.js", (1)

exec_mode: "cluster", (2)

instances: "max", (3)

env: {

"NODE_ENV": "production" (4)

}

}]

}This file configures PM2 to do the following:

| 1 | Run app.js to start the app. |

| 2 | Run in cluster mode, so that instead of a single Node.js process, you get one process per CPU, ensuring your app is able to take advantage of all the CPUs on your server. |

| 3 | Configure cluster mode to use all CPUs available in cluster mode. |

| 4 | Set the NODE_ENV environment variable to "production," which is how all Node.js apps and plugins know to run in

production mode rather than development mode. |

Finally, create tasks/main.yml with the contents shown in Example 32:

sample-app role’s tasks (ch3/ansible/roles/sample-app/tasks/main.yml)- name: Copy sample app (1)

copy:

src: ./

dest: /home/app-user/

- name: Start sample app using pm2 (2)

shell: pm2 start app.config.js

args:

chdir: /home/app-user/

- name: Save pm2 app list so it survives reboot (3)

shell: pm2 save| 1 | Copy the sample app code (app.js and app.config.js) from the files folder to the server. |

| 2 | Use PM2 to start the app in the background and start monitoring it. |

| 3 | Save the list of apps PM2 is running so that if the server reboots, PM2 will automatically restart those apps. |

These changes address most of the concerns in Watch out for snakes: these examples have several problems, improving your security posture (no more root user) and the reliability and performance of your app (process supervisor, cluster mode).

To try this code out, make sure you have Ansible installed, authenticate to AWS as described in Authenticating to AWS on the command line, and run the following command:

$ ansible-playbook -v -i inventory.aws_ec2.yml configure_sample_app_playbook.ymlAnsible will discover your servers, configure each one with all the dependencies it needs, and run the app on each one. At the end, you should see the IP addresses of servers, as shown in the following log output (truncated for readability):

PLAY RECAP ************************************ 13.58.56.201 : ok=9 changed=8 3.135.188.118 : ok=9 changed=8 3.21.44.253 : ok=9 changed=8 localhost : ok=6 changed=4

Copy the IP of one of the three servers, open http://<IP>:8080 in your web browser, and you should see the

familiar "Hello, World!" text once again.

While three servers is great for redundancy, it’s not so great for usability, as your users typically want just a single endpoint to hit. This requires deploying a load balancer, as described in the next section.

Example: Deploy a Load Balancer Using Ansible and Nginx

A load balancer is a piece of software that can distribute load across multiple servers or apps. You give your users a single endpoint to hit, which is the load balancer, and under the hood, the load balancer forwards the requests it receives to a number of different endpoints, using various algorithms (e.g., round-robin, hash-based, least-response-time, etc.) to process requests as efficiently as possible. There are many popular load balancer options out there, such as Apache, Nginx, and HAProxy, as well as cloud-specific load balancing services, such as AWS Elastic Load Balancer, GCP Cloud Load Balancer, and Azure Load Balancer.

In the cloud, you’d most likely use a cloud load balancer, as you’ll see later in this blog post. However, for the purposes of server orchestration, I decided to show you a simplified example of how to run your own load balancer, as server orchestration techniques should work on-prem as well. Therefore, you’ll be deploying Nginx.

To do that, you need one more server. If you have one already with SSH access, you can use it, and skip forward a few paragraphs. If not, you can deploy one more EC2 instance using the same create_ec2_instances_playbook.yml, but with a new variables file, nginx-vars.yml, with the contents shown in Example 33:

num_instances: 1

base_name: nginx_instances

http_port: 80This will create a single EC2 instance, with the base_name "nginx_instances," and it will allow requests on port 80,

which is the default port for HTTP. Run the playbook with this vars file as follows:

$ ansible-playbook \

-v create_ec2_instances_playbook.yml \

--extra-vars "@nginx-vars.yml"This should create one more EC2 instance you can use for nginx. Since the base_name for that instance is

nginx_instances, that will also be the group name in the inventory, so configure the variables for this group by

creating group_vars/nginx_instances.yml with the contents shown in Example 34:

ansible_user: ec2-user

ansible_ssh_private_key_file: ansible-ch3.key

ansible_host_key_checking: falseNow you can create a new playbook to configure these servers with Nginx. Create a new file called configure_nginx_playbook.yml with the contents shown in Example 35:

- name: Configure servers to run nginx

hosts: nginx_instances (1)

gather_facts: true

become: true

roles:

- role: nginx (2)This playbook does the following:

| 1 | Target the nginx_instances group you just configured in your inventory. |

| 2 | Configure the servers in that group using a new role called nginx, which is described next. |

Create a new folder for the nginx role with tasks and templates subfolders:

roles ├── nginx │ ├── tasks │ │ └── main.yml │ └── templates │ └── nginx.conf.j2 ├── nodejs-app └── sample-app

Inside of nginx/templates/nginx.conf.j2, create an Nginx configuration file template, as shown in Example 36:

user nginx;

worker_processes auto;

error_log /var/log/nginx/error.log notice;

pid /run/nginx.pid;

events {

worker_connections 1024;

}

http {

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log /var/log/nginx/access.log main;

include /etc/nginx/mime.types;

default_type application/octet-stream;

upstream backend { (1)

{% for host in groups['sample_app_instances'] %} (2)

server {{ hostvars[host]['public_dns_name'] }}:8080; (3)

{% endfor %}

}

server {

listen 80; (4)

listen [::]:80;

location / { (5)

proxy_pass http://backend;

}

}

}Most of this file is standard (boilerplate) Nginx configuration—check out the Nginx documentation if you’re curious to understand what it does—so I’ll just point out a few items to focus on:

| 1 | Use the upstream keyword to define a group of servers that can be referenced elsewhere in this file by the name

backend. You’ll see where this is used shortly. |

| 2 | Use Jinja templating syntax to loop over the servers in the

sample_app_instances group. |

| 3 | Use Jinja templating syntax to configure the backend upstream to route traffic to the public address and

port 8080 of each server in the sample_app_instances group. |

| 4 | Configure Nginx to listen on port 80. |

| 5 | Configure Nginx as a load balancer, forwarding requests to the / URL to the backend upstream. |

In short, the preceding configuration file will configure Nginx to load balance traffic across the servers you deployed to run the sample app.

Create nginx/tasks/main.yml with the contents shown in Example 37:

nginx role tasks (ch3/ansible/roles/nginx/tasks/main.yml)- name: Install Nginx (1)

yum:

name: nginx

- name: Copy Nginx config (2)

template:

src: nginx.conf.j2

dest: /etc/nginx/nginx.conf

- name: Start Nginx (3)

systemd_service:

state: started

enabled: true

name: nginxThis file defines the tasks for the nginx role, which are the following:

| 1 | Install Nginx. |

| 2 | Render the Nginx configuration file and copy it to the server. |

| 3 | Start Nginx. Note the use of systemd as a process supervisor to restart Nginx in case it crashes or the server

reboots. |

Run this playbook to configure Nginx:

$ ansible-playbook -v -i inventory.aws_ec2.yml configure_nginx_playbook.ymlWait a few minutes for everything to deploy and in the end, you should see log output that looks like this:

PLAY RECAP xxx.us-east-2.compute.amazonaws.com : ok=4 changed=2 failed=0

The value on the left, "xxx.us-east-2.compute.amazonaws.com," is a domain name you can use to access the Nginx server.

If you open http://xxx.us-east-2.compute.amazonaws.com (this time with no port number, as Nginx is listening on port

80, the default port for HTTP) in your browser, you should see "Hello, World!" yet again. Each time you refresh the

page, Nginx will send that request to a different EC2 instance. Congrats, you now have a single endpoint you can give

your users, and that endpoint will automatically balance the load across multiple servers!

Example: Roll Out Updates with Ansible

So you’ve now seen how to deploy using a server orchestration tool, but what about doing an update? Some configuration

management tools support various deployment strategies (a topic you’ll learn more about in Part 5),

such as a rolling deployment, where you update your servers in batches, so some servers are always running and

serving traffic, while others are being updated. With Ansible, the easiest way to have it do a rolling update is to add

the serial parameter to configure_sample_app_playbook.yml, as shown in Example 38:

- name: Configure servers to run the sample-app

# ... (other params omitted for clarity) ...

serial: 1 (1)

max_fail_percentage: 30 (2)| 1 | Setting serial to 1 tells Ansible to apply changes to one server at a time. Since you have three servers total,

this ensures that two servers are always available to serve traffic, while one goes down briefly for an update. |

| 2 | The max_fail_percentage parameter tells Ansible to abort a deployment if more than this percent of servers hit an

error during upgrade. Setting this to 30% with three servers means that if a single server fails to update,

Ansible will not try to deploy the changes to any other servers, so you never lose more than one server to a broken

update. |

Let’s give the rolling deployment a shot. Update the text that the app responds with in app.js, as shown in Example 39:

res.end('Fundamentals of DevOps!\n');And re-run the playbook:

$ ansible-playbook -v -i inventory.aws_ec2.yml configure_sample_app_playbook.ymlYou should see Ansible rolling out the change to one server at a time. When it’s done, if you refresh the Nginx IP in your browser, you should see the text "Fundamentals of DevOps!"

|

Get your hands dirty

Here are a few exercises you can try at home to get a better feel for using Ansible for server orchestration:

|

When you’re done experimenting with Ansible, you should manually undeploy the EC2 instances by finding each one in the EC2 Console (look for the instance IDs the playbook writes to the log), clicking "Instance state," and choosing "Terminate instance" in the drop down, as shown in Figure 18. This ensures that your account doesn’t start accumulating any unwanted charges.

VM Orchestration

The idea with VM orchestration is to do the following:

-

Create VM images that have your apps and all their dependencies fully installed and configured.

-

Deploy the VM images across a cluster of servers.

-

Scale the number of servers up or down depending on your needs.

-

When you need to deploy an update, create new VM images, deploy those onto new servers, and then undeploy the old servers.

This is a slightly more modern approach that works best with cloud providers such as AWS, GCP, and Azure, where the servers are all virtual servers, so you can spin up new ones and tear down old ones in minutes. That said, you can also use virtualization on-prem with tools from VMWare, Citrix, Microsoft Hyper-V, and so on. We’ll take a look at a VM orchestration example using AWS, but be aware that the basic techniques here apply to most VM orchestration tools, whether in the cloud or on-prem.

|

Key takeaway #2

VM orchestration is an immutable infrastructure approach where you deploy and manage VM images across virtualized servers. |

To get a feel for VM orchestration let’s go through an example. This requires the following three things:

-

A tool for building VM images: Just as in Part 2, you’ll use Packer to create VM images for AWS.

-

A tool for orchestrating VMs: This blog post series primarily uses AWS, so you’ll use AWS Auto Scaling Groups.

-

A tool for managing your infrastructure as code: Instead of setting up Auto Scaling Groups by manually clicking around, you’ll use the same IaC tool as in Part 2, OpenTofu, to manage your infrastructure as code.

We’ll start with the first item, building VM images.

Example: Build a VM Image Using Packer

Head into the fundamentals-of-devops folder you created in Part 1 to work through the examples in this blog post series, and create a new subfolder for the Packer code:

$ cd fundamentals-of-devops

$ mkdir -p ch3/packer

$ cd ch3/packerCopy the Packer template you created in Part 2 into the new ch3/packer folder:

$ cp ../../ch2/packer/sample-app.pkr.hcl .You should also copy app.js (the sample app) and app.config.js (the PM2 configuration file) from the server orchestration section of this blog post into the ch3/packer folder:

$ cp ../ansible/roles/sample-app/files/app*.js .Example 40 shows the updates to make to the Packer template:

app-user (ch3/packer/sample-app.pkr.hcl)build {

sources = [

"source.amazon-ebs.amazon_linux"

]

provisioner "file" { (1)

sources = ["app.js", "app.config.js"]

destination = "/tmp/"

}

provisioner "shell" {

inline = [

"curl -fsSL https://rpm.nodesource.com/setup_21.x | sudo bash -",

"sudo yum install -y nodejs",

"sudo adduser app-user", (2)

"sudo mv /tmp/app.js /tmp/app.config.js /home/app-user/", (3)

"sudo npm install pm2@latest -g", (4)

"eval \"$(sudo su app-user bash -c 'pm2 startup' | tail -n1)\"" (5)

]

pause_before = "30s"

}

}The main changes are to make security and reliability improvements similar to the ones you did in the server

orchestration section: that is, use PM2 as a process supervisor and create app-user to run the app (instead of using

the root user).

| 1 | Copy two files, app.js and app.config.js, onto the server (into the /tmp folder, as the final destination,

the home folder of app-user, doesn’t exist until a later step). |

| 2 | Create app-user. This will also automatically create a home folder for app-user. |

| 3 | Move app.js and app.config.js from the /tmp folder to the home folder of app-user. |

| 4 | Install PM2. |

| 5 | Configure PM2 to run on boot (as app-user) so if your server ever restarts, PM2 will restart your app. |

To build the AMI, make sure Packer is installed, authenticate to AWS as described in Authenticating to AWS on the command line, and run the following commands:

$ packer init sample-app.pkr.hcl

$ packer build sample-app.pkr.hclWhen the build is done, Packer will output the ID of the newly created AMI. Make sure to jot this ID down somewhere, as you’ll need it shortly.

Example: Deploy a VM Image in an Auto Scaling Group Using OpenTofu

The next step is to deploy the AMI. In Part 2, you used OpenTofu to deploy an AMI on a single EC2 instance. The goal now is to see VM orchestration at play, which means deploying multiple servers, or what’s sometimes called a cluster. Most cloud providers offer a native way to run VMs across a cluster: for example, AWS offers Auto Scaling Groups (ASG), GCP offers Managed Instance Groups, and Azure offers Scale Sets. For this example, you’ll be using an AWS ASG, as that offers a number of nice features:

- Cluster management

-

ASGs make it easy to launch multiple instances and manually resize the cluster.

- Auto scaling

-

You can also configure the ASG to resize the cluster automatically in response to load.

- Auto healing

-

The ASG monitors all the instances in the cluster and automatically replaces any instance that crashes.

Let’s use a reusable OpenTofu module called asg from this blog post series’s

sample code repo to deploy an ASG. You can find the module in the ch3/tofu/modules/asg

folder. This is a simple module that creates three main resources:

-

A launch template, which is a bit like a blueprint that specifies the configuration to use for each EC2 instance.

-

An ASG which uses the configuration in the launch template to stamp out EC2 instances. The ASG will deploy these instances into the Default VPC. See the note on Default VPCs in A Note on Default Virtual Private Clouds.

-

A security group which controls the traffic that can go in and out of each EC2 instance.

|

A Note on Default Virtual Private Clouds

All the AWS examples in the early parts of this blog post series use the Default VPC in your AWS account. A VPC, or virtual private cloud, is an isolated area of your AWS account that has its own virtual network and IP address space. Just about every AWS resource deploys into a VPC. If you don’t explicitly specify a VPC, the resource will be deployed into the Default VPC, which is part of every AWS account created after 2013 (if you deleted your Default VPC, you can create a new Default VPC using the VPC Console). It’s not a good idea to use the Default VPC for production apps, but it’s OK to use it for learning and testing. In Part 7 [coming soon], you’ll learn more about VPCs, including how to create a custom VPC that you can use for production apps instead of the Default VPC. |

To use the asg module, create a live/asg-sample folder to act as a root module:

$ cd fundamentals-of-devops

$ mkdir -p ch3/tofu/live/asg-sample

$ cd ch3/tofu/live/asg-sampleInside the asg-sample folder, create a main.tf file with the initial contents shown in Example 41:

asg module (ch3/tofu/live/asg-sample/main.tf)provider "aws" {

region = "us-east-2"

}

module "asg" {

source = "github.com/brikis98/devops-book//ch3/tofu/modules/asg"

name = "sample-app-asg" (1)

# TODO: fill in with your own AMI ID!

ami_id = "ami-0f5b3d9c244e6026d" (2)

user_data = filebase64("${path.module}/user-data.sh") (3)

app_http_port = 8080 (4)

instance_type = "t2.micro" (5)

min_size = 1 (6)

max_size = 10 (7)

desired_capacity = 3 (8)

}The preceding code sets the following parameters:

| 1 | name: The name to use for the launch template, ASG, security group, and all other resources created by the module. |

| 2 | ami_id: The AMI to use for each EC2 instance. You’ll need to set this to the ID of the AMI you built from the

Packer template in the previous section. |

| 3 | user_data: The user data script to run on each instance during boot. The contents of user-data.sh are shown in

Example 42. |

| 4 | app_http_port: The port to open in the security group to allow the app to receive HTTP requests. |

| 5 | instance_type: The type of instances to run in the ASG. |

| 6 | min_size: The minimum number of instances to run in the ASG. |

| 7 | max_size: The maximum number of instances to run in the ASG. |

| 8 | desired_capacity: The initial number of instances to run in the ASG. |

Create a file called user-data.sh with the contents shown in Example 42:

#!/usr/bin/env bash

set -e

sudo su app-user (1)

cd /home/app-user (2)

pm2 start app.config.js (3)

pm2 save (4)This user data script does the following:

| 1 | Switch to app-user. |

| 2 | Go into the home folder for app-user, which is where the Packer template copied the sample app code. |

| 3 | Use PM2 to run the sample app. |

| 4 | Save the running app status so that if the server reboots, PM2 will restart the sample app. |

If you were to run apply right now, you’d get an ASG with three EC2 instances running your sample app. While this is

great for redundancy, as discussed in the server orchestration section, you typically want to give your users just a

single endpoint to hit. This requires deploying a load balancer, as described in the next section.

Example: Deploy an Application Load Balancer Using OpenTofu

In the server orchestration section, you deployed your own load balancer using Nginx. This is a simplified deployment that works fine for an example, but has a number of drawbacks if you try to use it for production apps:

- Availability

-

You are running only a single instance for your load balancer. If it crashes, your users experience an outage.

- Scalability

-

If load exceeds what a single server can handle, users will see degraded performance or an outage.

- Maintenance

-

Keeping the load balancer up to date is entirely up to you. Moreover, when you need to update the load balancer itself (e.g., update to a new version of Nginx), it’s tricky to do so without downtime.

- Security

-

The load balancer server is not especially hardened against attacks.

- Encryption

-

If you want to encrypt data in transit (e.g., use HTTPS and TLS)—which you should for just about all production use cases—you’ll have to set it all up manually (you’ll learn more about encryption in Part 8 [coming soon]).

To be clear, there’s nothing wrong with Nginx: if you put the work in, there are ways to address all of these issues with Nginx. However, it’s a considerable amount of work. One of the big benefits of the cloud is that most cloud providers offer managed services that can do this work for you. Load balancing is a very common problem, and as I mentioned before, almost every cloud provider offers a managed service for load balancing, such as AWS Elastic Load Balancer, GCP Cloud Load Balancer, and Azure Load Balancer. All of these provide a number of powerful features out-of-the-box. For example, the AWS Elastic Load Balancer (ELB) gives you the following:

- Availability

-

Under the hood, AWS automatically deploys multiple servers for an ELB so you don’t get an outage if one server crashes.

- Scalability

-

AWS monitors load on the ELB, and if it is starting to exceed capacity, AWS automatically deploys more servers.

- Maintenance

-

AWS automatically keeps the load balancer up to date, with zero downtime.

- Security

-

AWS load balancers are hardened against a variety of attacks, including meeting the requirements of a variety of security standards (e.g., SOC 2, ISO 27001, HIPAA, PCI, FedRAMP) out-of-the-box.[15]

- Encryption

-

AWS has out-of-the-box support for HTTPS, Mutual TLS, TLS Offloading, auto-rotated TLS certs, and more.

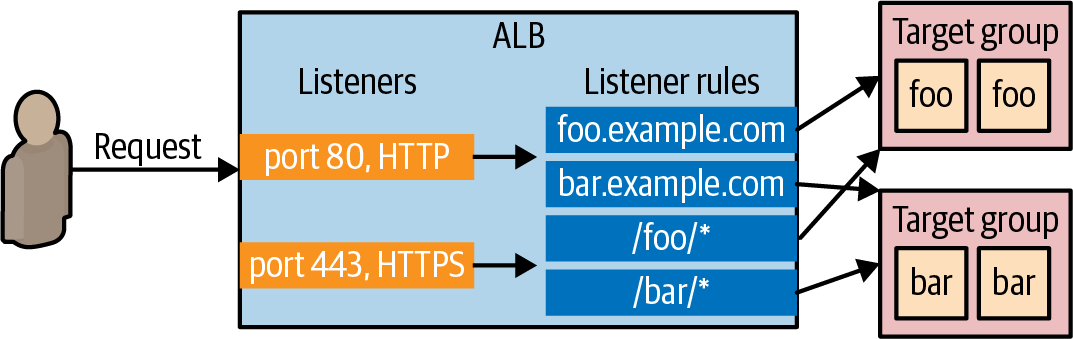

Using a managed service for load balancing can be a huge time saver, so let’s use an AWS load balancer. There are actually several types of AWS load balancers to choose from; the one that’ll be the best fit for the simple sample app is the Application Load Balancer (ALB). The ALB consists of several parts, as shown in Figure 24:

- Listener

-

Listen for requests on a specific port (e.g., 80) and protocol (e.g., HTTP).

- Listener rule

-

Specify which requests that come into a listener to route to which target group based on rules that match on request parameters such as the path (e.g.,

/fooand/bar) and hostname (e.g.,foo.example.comandbar.example.com). - Target groups

-

One or more servers that receive requests from the load balancer. The target group also performs health checks on these servers by sending each server a request on a configurable interval (e.g., every 30 seconds), and only considering the server as healthy if it returns an expected response (e.g., a 200 OK) within a configurable time period (e.g., within 2 seconds). The target group will only send requests to servers that pass its health checks.

The blog post series’s sample code repo includes a module called alb in the ch3/tofu/modules/alb folder that you

can use to deploy an ALB. Note that this is a very simple ALB—it deploys into the Default VPC (see the note in

A Note on Default Virtual Private Clouds) and only has a single listener rule where it forwards all requests to a single target group—but it

should suffice for our purposes in this blog post.

Example 43 shows how to update the asg-sample module to use the alb module:

alb module (ch3/tofu/live/asg-sample/main.tf)module "asg" {

source = "github.com/brikis98/devops-book//ch3/tofu/modules/asg"

# ... (other params omitted) ...

}

module "alb" {

source = "github.com/brikis98/devops-book//ch3/tofu/modules/alb"

name = "sample-app-alb" (1)

alb_http_port = 80 (2)

app_http_port = 8080 (3)

app_health_check_path = "/" (4)

}The preceding code sets the following parameters on the alb module:

| 1 | name: The name to use for the ALB, target group, security group, and all other resources created by the module. |

| 2 | alb_http_port: The port the ALB (the listener) listens on for HTTP requests. |

| 3 | app_http_port: The port the app listens on for HTTP requests. The ALB target group will send traffic and health

checks to this port. |

| 4 | app_health_check_path: The path to use when sending health check requests to the app. |

The one missing piece is the connection between the ASG and the ALB: that is, how does the ALB know which EC2 instances

to send traffic to (which instances to put in its target group)? To tie these pieces together, go back to your usage

of the asg module, and update it with one parameter, as shown in Example 44:

asg module (ch3/tofu/live/asg-sample/main.tf)module "asg" {

source = "github.com/brikis98/devops-book//ch3/tofu/modules/asg"

# ... (other params omitted) ...

target_group_arns = [module.alb.target_group_arn]

}The preceding code sets the target_group_arns parameter, which will change the ASG behavior in two ways:

-

First, it’ll configure the ASG to register all of its instances in the specified target group, including the initial set of instances when you first launch the ASG, as well as any new instances that launch later, either as a result of a deployment or auto healing or auto scaling.

-

Second, it’ll configure the ASG to use the ALB for health checks and auto healing. By default, the auto healing feature in the ASG is simple: it replaces any instance that has crashed (a hardware issue). However, if the instance is still running, and it’s the app that crashed or stopped responding (a software issue), the ASG won’t know to replace it. Configuring the ASG to use the ALB for health checks tells the ASG to replace an instance if it fails the load balancer’s health check, which gives you more robust auto healing, as the load balancer health check will detect both hardware and software issues.

The final change to the asg-sample module is to add the load balancer’s domain name as an output variable in

outputs.tf, as shown in Example 45:

output "alb_dns_name" {

value = module.alb.alb_dns_name

}To deploy the module, make sure OpenTofu is installed, authenticate to AWS as described in Authenticating to AWS on the command line, and run the following commands:

$ tofu init

$ tofu applyAfter a few minutes, everything should be deployed, and you should see the ALB domain name as an output:

Apply complete! Resources: 10 added, 0 changed, 0 destroyed. Outputs: alb_dns_name = "sample-app-tofu-656918683.us-east-2.elb.amazonaws.com"

Open this domain name up in your web browser, and you should see "Hello, World!" once again. Congrats, you now have a single endpoint, the load balancer domain name, that you can give your users, and when users hit it, the load balancer will distribute their requests across all your apps in your ASG!

Example: Roll Out Updates with OpenTofu and Auto Scaling Groups

You’ve seen the initial deployment with VM orchestration, but what about rolling out updates? Most of the VM

orchestration tools have support for zero-downtime deployments and various deployment strategies. For example, the

ASGs in AWS have native support for a feature called

instance refresh, which can update

your instances automatically by doing a rolling deployment. Example 46 shows how to enable

instance refresh in the asg module:

module "asg" {

source = "github.com/brikis98/devops-book//ch3/tofu/modules/asg"

# ... (other params omitted) ...

instance_refresh = {

min_healthy_percentage = 100 (1)

max_healthy_percentage = 200 (2)

auto_rollback = true (3)

}

}The preceding code sets the following parameters:

| 1 | min_healthy_percentage: Setting this to 100% means that the cluster will never have fewer than the desired number

of instances (initially, three), even during deployment. Whereas with server orchestration, you updated instances in

place, with VM orchestration, you’ll deploy new instances, as per the next parameter. |

| 2 | max_healthy_percentage: Setting this to 200% means that to deploy updates, the cluster will deploy totally new

instances, up to twice the original size of the cluster, wait for the new instances to pass health checks, and then

undeploy the old instances. So if you started with three instances, then during deployment, you’ll go up to six

instances, with three new and three old, and when the new instances pass health checks, you’ll go back to three

instances by undeploying the three old ones. |

| 3 | auto_rollback: If something goes wrong during deployment, and the new instances fail to pass health checks, this

setting will automatically initiate a rollback, putting your cluster back to its previous working condition. |

Run apply one more time to enable the instance refresh setting. Once that’s done, you can try rolling out a change.

For example, update app.js in the packer folder to respond with "Fundamentals of DevOps!", as shown in

Example 47:

res.end('Fundamentals of DevOps!\n');Next, build a new AMI using Packer:

$ packer build sample-app.pkr.hclThis will give you a new AMI ID. Update the ami_id in the asg-sample module to this new ID and run apply one

more time. You should then see a plan output that looks something like this (truncated for readability):

$ tofu apply

OpenTofu will perform the following actions:

# aws_autoscaling_group.sample_app will be updated in-place

~ resource "aws_autoscaling_group" "sample_app" {

# (27 unchanged attributes hidden)

~ launch_template {

id = "lt-0bc25ef067814e3c0"

name = "sample-app-tofu20240414163932598800000001"

~ version = "1" -> (known after apply)

}

# (3 unchanged blocks hidden)

}

# aws_launch_template.sample_app will be updated in-place

~ resource "aws_launch_template" "sample_app" {

~ image_id = "ami-0f5b3d9c244e6026d" -> "ami-0d68b7b6546331281"

~ latest_version = 1 -> (known after apply)

# (10 unchanged attributes hidden)

}This plan output shows that launch template has changed, due to the new AMI ID, and as a result, the version of the

launch template used in the ASG has changed. This will result in an instance refresh. Type in yes, hit Enter, and

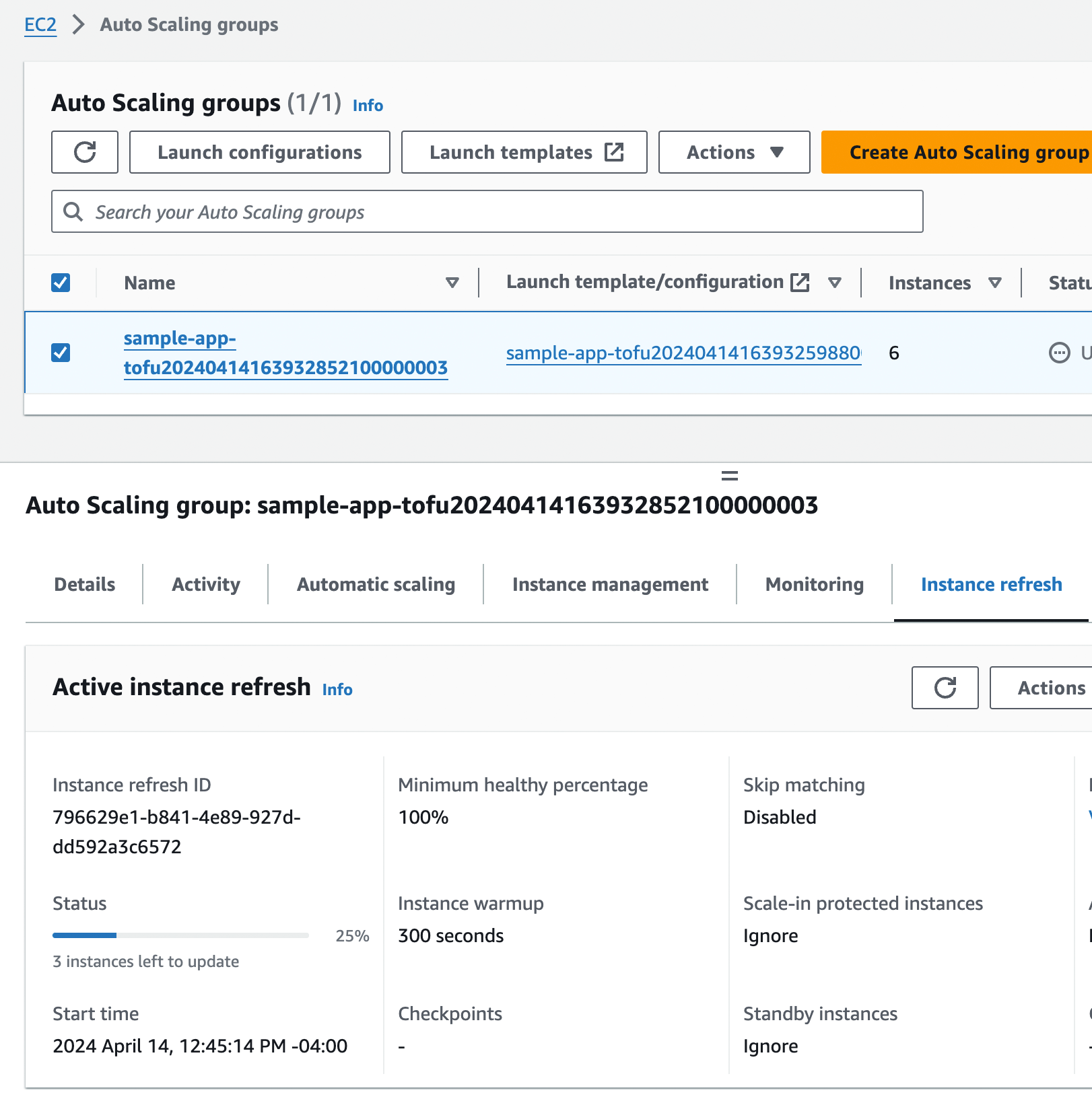

AWS will kick off the instance refresh process in the background. If you go to the EC2 Console, click Auto Scaling

Groups in the left nav, find your ASG, and click the "Instance refresh" tab, you should be able to see the instance

refresh in progress, as shown in Figure 25.

During this process, the ASG will launch three new EC2 instances, and the ALB will start performing health checks. Once the new instances start to pass health checks, the ASG will undeploy the old one instances, leaving you with just the three new instances running the new code. The whole process should take around five minutes.

During this deployment, the load balancer URL should always return a successful response, as this is a zero-downtime deployment. You can even check this by opening a new terminal tab, and running the following Bash one-liner:

$ while true; do curl http://<load_balancer_url>; sleep 1; doneThis code runs curl, an HTTP client, in a loop, hitting your ALB once per second and allowing you to see the

zero-downtime deployment in action. For the first couple minutes, you should see only responses from the old instances:

"Hello, World!" Then, as new instances start to pass health checks, the ALB will begin sending traffic to them, and you

should see the response from the ALB alternate between "Hello, World!" and "Fundamentals of DevOps!" After another couple

minutes, the "Hello, World!" message will disappear, and you’ll see only "Fundamentals of DevOps!", which means all the

old instances have been shut down. The output will look something like this:

Hello, World! Hello, World! Hello, World! Hello, World! Hello, World! Hello, World! Fundamentals of DevOps! Hello, World! Fundamentals of DevOps! Hello, World! Fundamentals of DevOps! Hello, World! Fundamentals of DevOps! Hello, World! Fundamentals of DevOps! Hello, World! Fundamentals of DevOps! Fundamentals of DevOps! Fundamentals of DevOps! Fundamentals of DevOps! Fundamentals of DevOps! Fundamentals of DevOps!

Congrats, you’ve now seen VM orchestration in action, including rolling out changes following immutable infrastructure practices!

|

Get your hands dirty

Here are a few exercises you can try at home to get a better feel for using OpenTofu for VM orchestration:

|

When you’re done experimenting with the ASG, run tofu destroy to undeploy all your infrastructure. This ensures that

your account doesn’t start accumulating any unwanted charges.

Container Orchestration

The idea with container orchestration is to do the following:

-

Create container images that have your apps and all their dependencies fully installed and configured.

-

Deploy the container images across a cluster of servers, with potentially multiple containers per server, packed in as efficiently as possible (bin packing).

-

Automatically scale the number of servers or the number of containers up or down depending on load.

-

When you need to deploy an update, create new container images, deploy them into the cluster, and then undeploy the old containers.

Although containers have been around for decades[16], container orchestration started to explode in popularity around 2013, with the emergence of Docker, a tool for building, running, and sharing containers, and Kubernetes, a container orchestration tool. The reason for this popularity is that containers and container orchestration offer a number of advantages over VMs and VM orchestration:

- Speed

-

Containers typically build faster than VMs, especially with caching. Moreover, container orchestration tools typically deploy containers faster than VMs. So the build & deploy cycle with containers can be considerably faster: you can expect 10-20 minutes for VMs, but just 1-5 minutes for containers.

- Efficiency

-

Most container orchestration tools have a built-in scheduler to decide which servers in your cluster should run which containers, using bin packing algorithms to use the available resources as efficiently as possible.[17]

- Portability

-

You can run containers and container orchestration tools everywhere, including on-prem and in all the major cloud providers. Moreover, the most popular container tool, Docker, and container orchestration tool, Kubernetes, are both open source. All of this means that using containers reduces lock-in to any single vendor.

- Local development

-

You can also run containers and container orchestration tools in your own local dev environment, as containers typically have reasonable file sizes, boot quickly, and have little CPU/memory overhead. This is a huge boon to local development, as you could now realistically run your entire tech stack—e.g., Kubernetes and Docker containers for multiple services—completely locally. While it’s possible to run VMs locally too, VM images tend to be considerably bigger, slower to boot, and have more CPU and memory overhead, so using them for local development is relatively uncommon; moreover, there is no practical way to run most VM orchestration tools locally: e.g., there’s no way to deploy an AWS ASG on your own computer.

- Functionality

-

Container orchestration tools solved more orchestration problems out-of-the-box than VM orchestration tools. For example, Kubernetes has built-in solutions for deployment, updates, auto scaling, auto healing, configuration, secrets management, service discovery, and disk management.

|

Key takeaway #3

Container orchestration is an immutable infrastructure approach where you deploy and manage container images across a cluster of servers. |

There are many container tools out there, including Docker, Moby, CRI-O, Podman, runc, and buildkit. Likewise, there are many container orchestration tools out there, including Kubernetes, Nomad, Docker Swarm, Amazon ECS, Marathon / Mesos, and OpenShift. The most popular, by far, are Docker and Kubernetes—so much so their names are nearly synonymous with containers and container orchestration, respectively—so that’s what we’ll focus on in this blog post series.

In the next several sections, you’ll learn to use Docker, followed by Kubernetes, and finally, you’ll learn to use Docker and Kubernetes in AWS. Let’s get into it!

Example: A Crash Course on Docker

As you may remember from Section 2.4, Docker images are like self-contained "snapshots" of the operating system (OS), the software, the files, and all other relevant details. Let’s now see Docker in action.

First, if you don’t have Docker installed already, follow the instructions on the

Docker website to install Docker Desktop for your operating system. Once it’s

installed, you should have the docker command available on your command line. You can use the docker run command to

run Docker images locally:

$ docker run <IMAGE> [COMMAND]where IMAGE is the Docker image to run and COMMAND is an optional command to execute. For example, here’s how you

can run a Bash shell in an Ubuntu 24.04 Docker image (note that the following command includes the -it flag so you

get an interactive shell where you can type):

$ docker run -it ubuntu:24.04 bash

Unable to find image 'ubuntu:24.04' locally

24.04: Pulling from library/ubuntu

Digest: sha256:3f85b7caad41a95462cf5b787d8a04604c

Status: Downloaded newer image for ubuntu:24.04

root@d96ad3779966:/#And voilà, you’re now in Ubuntu! If you’ve never used Docker before, this can seem fairly magical. Try running some commands. For example, you can look at the contents of /etc/os-release to verify you really are in Ubuntu:

root@d96ad3779966:/# cat /etc/os-release PRETTY_NAME="Ubuntu 24.04 LTS" NAME="Ubuntu" VERSION_ID="24.04" (...)

How did this happen? Well, first, Docker searches your local filesystem for the ubuntu:24.04 image. If you don’t

have that image downloaded already, Docker downloads it automatically from Docker Hub, which

is a Docker Registry that contains shared Docker images. The ubuntu:24.04 image happens to be a public Docker

image—an official one maintained by the Docker team—so you’re able to download it without any authentication. It’s also

possible to create private Docker images that only certain authenticated users can use, as you’ll see later in this

blog post.

Once the image is downloaded, Docker runs the image, executing the bash command, which starts an interactive Bash

prompt, where you can type. Try running the ls command to see the list of files:

root@d96ad3779966:/# ls -al total 56 drwxr-xr-x 1 root root 4096 Feb 22 14:22 . drwxr-xr-x 1 root root 4096 Feb 22 14:22 .. lrwxrwxrwx 1 root root 7 Jan 13 16:59 bin -> usr/bin drwxr-xr-x 2 root root 4096 Apr 15 2020 boot drwxr-xr-x 5 root root 360 Feb 22 14:22 dev drwxr-xr-x 1 root root 4096 Feb 22 14:22 etc drwxr-xr-x 2 root root 4096 Apr 15 2020 home lrwxrwxrwx 1 root root 7 Jan 13 16:59 lib -> usr/lib drwxr-xr-x 2 root root 4096 Jan 13 16:59 media (...)

You might notice that’s not your filesystem. That’s because Docker images run in containers that are isolated at the userspace level: when you’re in a container, you can only see the filesystem, memory, networking, etc., in that container. Any data in other containers, or on the underlying host operating system, is not accessible to you, and any data in your container is not visible to those other containers or the underlying host operating system. This is one of the things that makes Docker useful for running applications: the image format is self-contained, so Docker images run the same way no matter where you run them, and no matter what else is running there.

To see this in action, write some text to a test.txt file as follows:

root@d96ad3779966:/# echo "Hello, World!" > test.txt

Next, exit the container by hitting Ctrl-D, and you should be back in your original command prompt on your underlying host OS. If you try to look for the test.txt file you just wrote, you’ll see that it doesn’t exist: the container’s filesystem is totally isolated from your host OS.

Now, try running the same Docker image again:

$ docker run -it ubuntu:24.04 bash

root@3e0081565a5d:/#Notice that this time, since the ubuntu:24.04 image is already downloaded, the container starts almost instantly.

This is another reason Docker is useful for running applications: unlike virtual machines, containers are lightweight,

boot up quickly, and incur little CPU or memory overhead.

You may also notice that the second time you fired up the container, the command prompt looked different. That’s

because you’re now in a totally new container; any data you wrote in the previous one is no longer accessible to you.

Run ls -al and you’ll see that the test.txt file does not exist. Containers are isolated not only from the host OS

but also from each other.

Hit Ctrl-D again to exit the container, and back on your host OS, run the docker ps -a command:

$ docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS

3e0081565a5d ubuntu:24.04 "bash" 5 min ago Exited (0) 16 sec ago

d96ad3779966 ubuntu:24.04 "bash" 14 min ago Exited (0) 5 min agoThis will show you all the containers on your system, including the stopped ones (the ones you exited). You can start

a stopped container again by using the docker start <ID> command, setting ID to an ID from the CONTAINER ID column

of the docker ps output. For example, here is how you can start the first container up again (and attach an

interactive prompt to it via the -ia flags):

$ docker start -ia d96ad3779966

root@d96ad3779966:/#You can confirm this is really the first container by outputting the contents of test.txt:

root@d96ad3779966:/# cat test.txt Hello, World!

Hit Ctrl-D once more to exit the container and get back to your host OS.

Now that you’ve seen the basics of Docker, let’s look at what it takes to create your own Docker images, and use them to run web apps.

Example: Create a Docker Image for a Node.js app

Let’s see how a container can be used to run a web app: in particular, the Node.js sample app you’ve been using throughout this blog post series. Create a new folder called docker:

$ cd fundamentals-of-devops

$ mkdir -p ch3/docker

$ cd ch3/dockerCopy app.js from the server orchestration section into the docker folder (note: you do not need to copy app.config.js this time):

$ cp ../ansible/roles/sample-app/files/app.js .Next, create a file called Dockerfile, with the contents shown in Example 48:

(1)

FROM node:21.7

(2)

WORKDIR /home/node/app

(3)

COPY app.js .

(4)

EXPOSE 8080

(5)

USER node

(6)

CMD ["node", "app.js"]Just as you used a Packer template to define how to build a VM image for your sample app, this Dockerfile is a template that defines how to build a Docker image for your sample app. This Dockerfile does the following:

| 1 | It starts with the official Node.js Docker image from Docker Hub as the base. One of the advantages of Docker is that it’s easy to share Docker images, so instead of having to figure out how to install Node.js yourself, you can use the official image, which is maintained by the Node.js team. |

| 2 | Set the working directory for the rest of the build. |

| 3 | Copy app.js into the Docker image. |

| 4 | This tells the Docker image to advertise that the app within it will listen on port 8080. When someone uses your

Docker image, they can use the information from EXPOSE to figure out which ports they wish to expose. You’ll see

an example of this shortly. |

| 5 | Use the node user (created as part of the official Node.js Docker image) instead of the root user when running

this app. |

| 6 | When you run the Docker image, this will be the default command that it executes. Note that you typically do not

need to use a process supervisor for Docker images, as Docker orchestration tools take care of process supervision,

resource usage (e.g., CPU, memory), and so on, automatically. Also note that just about all container

orchestration tools expect your containers to run apps in the "foreground," blocking until they exit, and logging

directly to stdout and stderr. |

To build a Docker image from this Dockerfile, use the docker build command:

$ docker build -t sample-app:v1 .The -t flag is the tag (name) to use for the Docker image: the preceding code sets the image name to "sample-app" and

the version to "v1." Later on, if you make changes to the sample app, you’ll be able to build a new Docker image and

give it a new version, such as "v2." The dot (.) at the end tells docker build to run the build in the current

directory (which should be the folder that contains your Dockerfile). When the build finishes, you can use the

docker run command to run your new image:

$ docker run --init sample-app:v1

Listening on port 8080Note the use of --init: Node.js doesn’t handle kernel signals (such as Ctrl+C) properly, so this is necessary to

ensure that the app will exit correctly if you hit Ctrl+C. See

Docker and

Node.js best practices for more information, including other practices that you should use with Node.js Docker images.

Your app is now listening on port 8080! However, if you open a new terminal on your host operating system and try to access the sample app, it won’t work:

$ curl localhost:8080

curl: (7) Failed to connect to localhost port 8080: Connection refusedWhat’s the problem? Actually, it’s not a problem but a feature! Docker containers are isolated from the host operating

system and other containers, not only at the filesystem level but also in terms of networking. So while the container

really is listening on port 8080, that is only on a port inside the container, which isn’t accessible on the host OS.

If you want to expose a port from the container on the host OS, you have to do it via the -p flag.

First, hit Ctrl-C to shut down the sample-app container: note that it’s Ctrl-C this time, not Ctrl-D, as you’re

shutting down a process, rather than exiting an interactive prompt. Now rerun the container but this time with the

-p flag as follows:

$ docker run -p 8080:8080 --init sample-app:v1

Listening on port 8080Adding -p 8080:8080 to the command tells Docker to expose port 8080 inside the container on port 8080 of the host OS.

You know to use port 8080 here, as you built this Docker image yourself, but if this was someone else’s image, you

could use docker inspect on the image, and that will tell you about any ports that image labeled with EXPOSE.

In another terminal on your host OS, you should now be able to see the sample app working:

$ curl localhost:8080

Hello, World!Congrats, you now know how to run a web app locally using Docker! However, while using docker run directly is fine

for local testing and learning, it’s not the way you’d run Dockerized apps in production. For that, you typically want

to use a container orchestration tool such as Kubernetes, which is the topic of the next section.

|

Cleaning Up Containers

Every time you run |

Example: Deploy a Dockerized App with Kubernetes

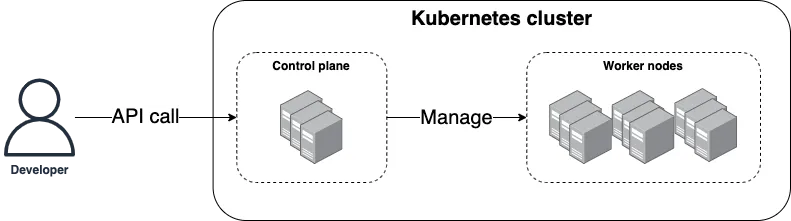

Kubernetes is a container orchestration tool, which means it’s a platform for running and managing containers on your servers, including scheduling, auto healing, auto scaling, load balancing, and much more. Under the hood, Kubernetes consists of two main pieces, as shown in Figure 26:

- Control plane

-

The control plane is responsible for managing the Kubernetes cluster. It is the "brains" of the operation, responsible for storing the state of the cluster, monitoring containers, and coordinating actions across the cluster. It also runs the API server, which provides an API you can use from command-line tools (e.g.,

kubectl), web UIs (e.g., the Kubernetes Dashboard), and IaC tools (e.g., OpenTofu) to control what’s happening in the cluster. - Worker nodes

-

The worker nodes are the servers used to actually run your containers. The worker nodes are entirely managed by the control plane, which tells each worker node what containers it should run.

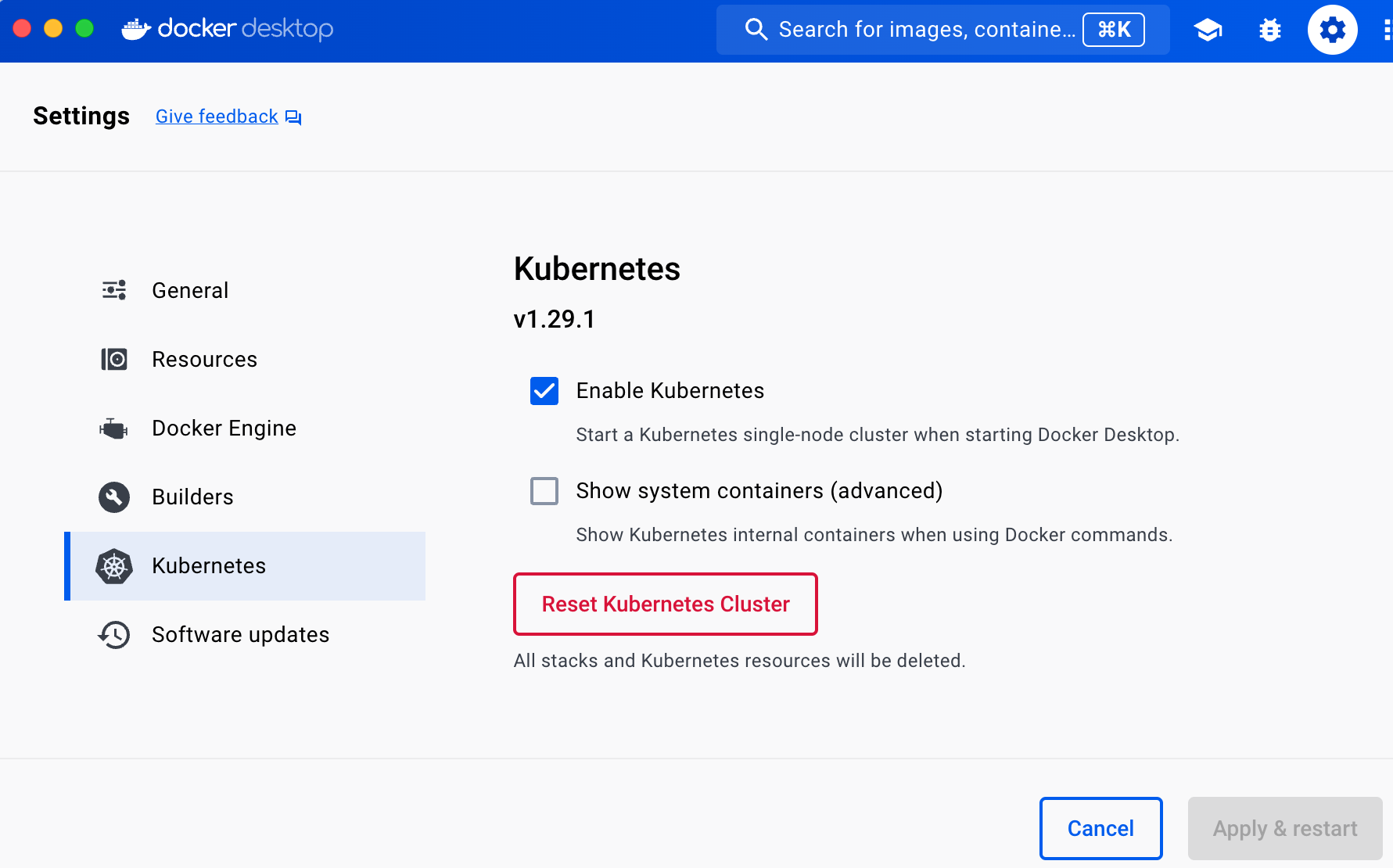

Kubernetes is open source, and one of its strengths is that you can run it anywhere: in any public cloud (e.g., AWS, Azure, GCP), in your own datacenter, and even on your personal computer. A little later in this blog post, I’ll show you how you can run Kubernetes in the cloud (in AWS), but for now, let’s start small and run it locally. This is easy to do if you installed a relatively recent version of Docker Desktop, as it has the ability to fire up a Kubernetes cluster locally with just a few clicks.

If you open Docker Desktop’s preferences on your computer, you should see Kubernetes in the nav, as shown in Figure 27.

If it’s not enabled already, check the Enable Kubernetes checkbox, click Apply & Restart, and wait a few minutes

for that to complete. In the meantime, follow the instructions on the

Kubernetes website to install kubectl, which is the command-line tool for

interacting with Kubernetes.

To use kubectl, you must first update its configuration file, which lives in $HOME/.kube/config (that is, the

.kube folder of your home directory), to tell it what Kubernetes cluster to connect to. Conveniently, when you enable

Kubernetes in Docker Desktop, it updates this config file for you, adding a docker-desktop entry to it, so all you

need to do is tell kubectl to use this configuration as follows:

$ kubectl config use-context docker-desktop

Switched to context "docker-desktop".Now you can check if your Kubernetes cluster is working with the get nodes command:

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

docker-desktop Ready control-plane 2m31s v1.29.1The get nodes command shows you information about all the nodes in your cluster. Since you’re running Kubernetes

locally, your computer is the only node, and it’s running both the control plane and acting as a worker node. You’re

now ready to run some Docker containers!

To deploy something in Kubernetes, you create Kubernetes objects, which are persistent entities you write to the Kubernetes cluster (via the API server) that record your intent: e.g., your intent to have specific Docker images running. The cluster runs a reconciliation loop, which continuously checks the objects you stored in it and works to make the state of the cluster match your intent.

There are many different types of Kubernetes objects available. The one we’ll use to deploy your sample app is a Kubernetes Deployment, which is a declarative way to manage an application in Kubernetes. The Deployment allows you to declare what Docker images to run, how many copies of them to run (replicas), a variety of settings for those images (e.g., CPU, memory, port numbers, environment variables), and so on, and the Deployment will then work to ensure that the requirements you declared are always met.

One way to interact with Kubernetes is to create YAML files to define your Kubernetes objects, and to use the

kubectl apply command to submit those objects to the cluster. Create a new folder called kubernetes to store these

YAML files:

$ cd fundamentals-of-devops

$ mkdir -p ch3/kubernetes

$ cd ch3/kubernetesWithin the kubernetes folder, create a file called sample-app-deployment.yml with the contents shown in Example 49:

apiVersion: apps/v1

kind: Deployment (1)

metadata: (2)

name: sample-app-deployment

spec:

replicas: 3 (3)

template: (4)

metadata: (5)

labels:

app: sample-app-pods

spec:

containers: (6)

- name: sample-app (7)

image: sample-app:v1 (8)

ports:

- containerPort: 8080 (9)

env: (10)

- name: NODE_ENV

value: production

selector: (11)

matchLabels:

app: sample-app-podsThis YAML file gives you a lot of functionality for just ~20 lines of code:

| 1 | The kind keyword specifies that this is Kubernetes object is a Deployment. |

| 2 | Every Kubernetes object includes metadata that can be used to identify and target that object in API calls. Kubernetes makes heavy use of metadata and labels to keep the system highly flexible and loosely coupled. The preceding code sets the name of the Deployment to "sample-app-deployment." |

| 3 | The Deployment will run 3 replicas. |

| 4 | This is the pod template—the blueprint—that defines what this Deployment will deploy and manage. It’s similar to

the launch template you saw with AWS ASGs. In Kubernetes, instead of deploying one container at a time, you deploy

pods, which are groups of containers that are meant to be deployed together. For example, you could have a pod

with one container to run a web app (e.g., the sample app) and another container that gathers metrics on the web

app and sends them to a central service (e.g., Datadog). So this template block allows you to configure your

pods, specifying what container(s) to run, the ports to use, environment variables to set, and so on. |

| 5 | Templates can be used separately from Deployments, so they have separate metadata which allows you to identify and target that template in API calls (this is another example of Kubernetes trying to be highly flexible and decoupled). The preceding code sets the "app" label to "sample-app-pods." You’ll see how this is used shortly. |

| 6 | Inside the pod template, you define one or more containers to run in that pod. |

| 7 | This simple example configures just a single container to run, giving it the name "sample-app." |

| 8 | The Docker image to run for this container. This is the Docker image you built earlier in the post. |

| 9 | This tells Kubernetes that the Docker image listens for requests on port 8080. |

| 10 | The env configuration lets you set environment variables for the container. The preceding code sets the

NODE_ENV environment variable to "production" to tell the Node.js app and all its dependencies to run in

production mode. |

| 11 | The selector block tells the Kubernetes Deployment what to target: that is, which pod template to deploy

and manage. Why doesn’t the Deployment just assume that the pod defined within that Deployment is the one you want

to target? Because Deployments and templates can be defined completely separately, so you always need to specify a

selector to tell the Deployment what to target (this is yet another example of Kubernetes trying to be

flexible and decoupled). |

You can use the kubectl apply command to apply your Deployment configuration:

$ kubectl apply -f sample-app-deployment.yml

deployment.apps/sample-app-deployment createdThis command should complete very quickly. How do you know if it actually worked? To answer that question, you can

use kubectl to explore your cluster. First, run the get deployments command, and you should see your Deployment:

$ kubectl get deployments

NAME READY UP-TO-DATE AVAILABLE AGE

sample-app-deployment 3/3 3 3 1mHere, you can see how Kubernetes uses metadata, as the name of the Deployment (sample-app-deployment) comes from your

metadata block. You can use that metadata in API calls yourself. For example, to get more details about a specific

Deployment, you can run describe deployment <NAME>, where <NAME> is the name from the metadata:

$ kubectl describe deployment sample-app-deployment

Name: sample-app-deployment

CreationTimestamp: Mon, 15 Apr 2024 12:28:19 -0400

Selector: app=sample-app-pods

Replicas: 3 desired | 3 updated | 3 total | 3 available

StrategyType: RollingUpdate

MinReadySeconds: 0

RollingUpdateStrategy: 0 max unavailable, 3 max surge

(... truncated for readability ...)This Deployment is reporting that all 3 replicas are available. To see those replicas, run the get pods command:

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

sample-app-deployment-64f97797fb-hcskq 1/1 Running 0 4m23s

sample-app-deployment-64f97797fb-p7zjk 1/1 Running 0 4m23s

sample-app-deployment-64f97797fb-qtkl8 1/1 Running 0 4m23sAnd to get the details about a specific pod, copy its name, and run describe pod:

$ kubectl describe pod sample-app-deployment-64f97797fb-hcskq

Name: sample-app-deployment-64f97797fb-hcskq

Node: docker-desktop/192.168.65.3

Start Time: Mon, 15 Apr 2024 14:08:04 -0400

Labels: app=sample-app-pods

pod-template-hash=64f97797fb

Status: Running

IP: 10.1.0.31

Controlled By: ReplicaSet/sample-app-deployment-64f97797fb

Containers:

sample-app:

Image: sample-app:v1

Port: 8080/TCP

Host Port: 0/TCP

(... truncated for readability ...)From this output, you can see the containers that are running for each pod, which in this case, is just one container per pod running the sample-app:v1 Docker image you built earlier.